Multithreaded Rendering

After working out a thread manager class that stores a stack of C++ command buffers, I've got a pretty nice proof of concept working. I can call functions in the game thread and the appropriate actions are pushed onto a command buffer that is then passed to the rendering thread when World::Render is called. The rendering thread is where all the (currently) OpenGL code is executed. When you create a context or load a shader, all it does is create the appropriate structure and send a request over to the rendering thread to finish the job.
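To make the hand-off concrete, here is a minimal sketch of the general pattern using only the standard library. It is not the engine's code: `Command`, `CommandBuffer`, `RenderThread`, `Submit`, and `RecordAndRender` are hypothetical names, and the "rendering" work is just a string appended to a log.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical names throughout; this is not the Leadwerks API.
using Command = std::function<void()>;
using CommandBuffer = std::vector<Command>;

class RenderThread {
public:
    RenderThread() : worker([this] { Run(); }) {}

    ~RenderThread() {
        {
            std::lock_guard<std::mutex> lock(mutex);
            quit = true;
        }
        wake.notify_one();
        worker.join(); // the worker drains any pending buffers before it exits
    }

    // Called from the game thread: hand over one complete buffer of commands.
    void Submit(CommandBuffer buffer) {
        {
            std::lock_guard<std::mutex> lock(mutex);
            pending.push(std::move(buffer));
        }
        wake.notify_one();
    }

private:
    void Run() {
        for (;;) {
            CommandBuffer buffer;
            {
                std::unique_lock<std::mutex> lock(mutex);
                wake.wait(lock, [this] { return quit || !pending.empty(); });
                if (pending.empty()) return; // quit was requested and nothing is left
                buffer = std::move(pending.front());
                pending.pop();
            }
            // Execute outside the lock so the game thread can keep submitting.
            for (auto& command : buffer) command();
        }
    }

    std::mutex mutex;
    std::condition_variable wake;
    std::queue<CommandBuffer> pending;
    bool quit = false;
    std::thread worker; // declared last so the other members exist before it starts
};

// Usage: the game thread records two requests and submits them as one buffer.
std::vector<std::string> RecordAndRender() {
    std::vector<std::string> log;
    {
        RenderThread renderer;
        CommandBuffer frame;
        frame.push_back([&log] { log.push_back("create context"); });
        frame.push_back([&log] { log.push_back("load shader"); });
        renderer.Submit(std::move(frame));
    } // the destructor joins the thread, so the log is complete here
    return log;
}
```

The game thread only ever touches the buffer it is currently recording; once a buffer is submitted it belongs to the rendering thread, which keeps the locking down to the brief moment of the hand-off.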

Consequently, there is currently no way of detecting if OpenGL initialization fails(!) and in fact the game will still run along just fine without any graphics rendering! We obviously need a mechanism to detect this, but it is interesting that you can now load a map and run your game without ever creating a window or graphics context. The following code is perfectly legitimate in Leadwerks 5:

```cpp
#include "Leadwerks.h"

using namespace Leadwerks;

int main(int argc, const char *argv[])
{
    auto world = CreateWorld();
    auto map = LoadMap(world, "Maps/start.map");

    // No window or graphics context is ever created
    while (true)
    {
        world->Update();
    }
    return 0;
}
```
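As for detecting a failed initialization, one possible mechanism (an assumption on my part, not something the engine does today) is to have the rendering thread report its result back through a `std::promise`. In this sketch `InitializeGraphics` and `CreateContextAndWait` are hypothetical stand-ins, and the "driver" is just a boolean:

```cpp
#include <future>
#include <thread>

// Hypothetical stand-in for the OpenGL setup done on the rendering thread.
bool InitializeGraphics(bool driverPresent) {
    return driverPresent;
}

// The game thread asks for a context and later checks how the request went.
bool CreateContextAndWait(bool driverPresent) {
    std::promise<bool> result;
    std::future<bool> status = result.get_future();

    std::thread renderThread([&result, driverPresent] {
        result.set_value(InitializeGraphics(driverPresent));
    });

    // The game thread could keep working here and only check the status
    // when it actually needs to know; this sketch simply waits for it.
    bool ok = status.get();
    renderThread.join();
    return ok;
}
```

A real version would hand the promise over inside the command buffer rather than spawning a thread per request, but the idea of a one-shot result channel is the same.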

The rendering thread is able to run at its own frame rate independently from the game thread and I have tested under some pretty extreme circumstances to make sure the threads never lock up. By default, I think the game loop will probably self-limit its speed to a maximum of 30 updates per second, giving you a whopping 33 milliseconds for your game logic, but this frequency can be changed to any value, or completely removed by setting it to zero (not recommended, as this can easily lock up the rendering thread with an infinite command buffer stack!). No matter the game frequency, the rendering thread runs at its own speed which is either limited by the window refresh rate, an internal clock, or it can just be let free to run as fast as possible for those triple-digit frame rates.
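The self-limiting game loop can be sketched with `std::chrono`. This is only an illustration of the timing described above, not the engine's loop: `UpdateInterval` and `RunLoop` are hypothetical helpers, and a frequency of zero is treated as "no limit".

```cpp
#include <chrono>
#include <thread>

// Hypothetical helper: time budget per update for a given frequency.
// A frequency of zero (or less) means the loop is not limited at all.
std::chrono::microseconds UpdateInterval(int updatesPerSecond) {
    if (updatesPerSecond <= 0) return std::chrono::microseconds(0);
    return std::chrono::microseconds(1000000 / updatesPerSecond);
}

// Runs a fixed number of updates at the requested frequency and
// returns how long the whole run took.
template <typename UpdateFn>
std::chrono::steady_clock::duration RunLoop(int updates, int updatesPerSecond, UpdateFn update) {
    using clock = std::chrono::steady_clock;
    const auto interval = UpdateInterval(updatesPerSecond);
    const auto start = clock::now();
    auto next = start;
    for (int i = 0; i < updates; ++i) {
        update();                            // game logic for this tick
        next += interval;                    // fixed schedule, so delays do not accumulate
        std::this_thread::sleep_until(next); // returns immediately if we are already late
    }
    return clock::now() - start;
}
```

At 30 updates per second the interval works out to 33,333 microseconds, which is the 33 millisecond budget mentioned above. With a frequency of zero the sleep never blocks, which is exactly the case that can flood the rendering thread with command buffers.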

Shaders are now loaded from multiple files instead of being packed into a single .shader file. When you load a shader, the file extension will be stripped off (if it is present) and the engine will look for .vert, .frag, .geom, .eval, and .ctrl files for the different shader stages:

```cpp
auto shader = LoadShader("Shaders/Model/diffuse");
```

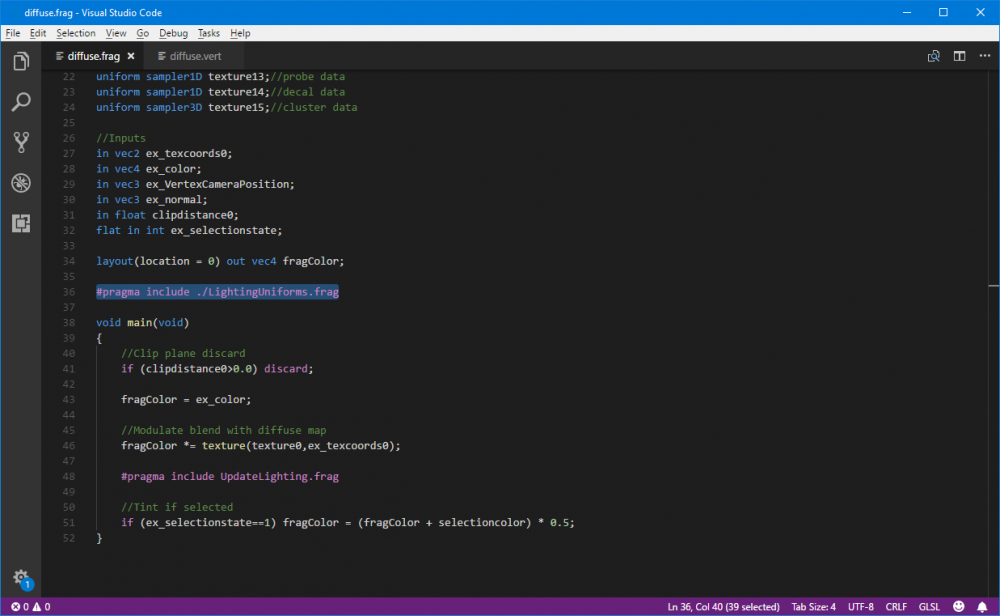
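The file lookup described above can be sketched as follows. `StripExtension` and `ShaderStageFiles` are hypothetical helpers written for illustration, not the engine's loader; they only build the candidate file names and do not touch the disk.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: remove a trailing file extension, if there is one.
std::string StripExtension(const std::string& path) {
    const std::size_t slash = path.find_last_of("/\\");
    const std::size_t dot = path.find_last_of('.');
    if (dot == std::string::npos) return path;
    // A dot inside a folder name is not a file extension.
    if (slash != std::string::npos && dot < slash) return path;
    return path.substr(0, dot);
}

// Hypothetical helper: list the per-stage files the loader would look for.
std::vector<std::string> ShaderStageFiles(const std::string& path) {
    static const char* const extensions[] = {".vert", ".frag", ".geom", ".eval", ".ctrl"};
    const std::string base = StripExtension(path);
    std::vector<std::string> files;
    for (const char* extension : extensions) files.push_back(base + extension);
    return files;
}
```

Both `"Shaders/Model/diffuse"` and `"Shaders/Model/diffuse.shader"` produce the same five candidates, starting with `Shaders/Model/diffuse.vert`.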

The asynchronous shader compiling in the engine could make our shader editor a little bit more difficult to handle, except that I don't plan on making any built-in shader editor in the new editor! Instead I plan to rely on Visual Studio Code as the official IDE, and maybe add a plugin that tests to see if shaders compile and link on your current hardware. I found that a pragma statement can be used to indicate include files (not implemented yet) and it won't trigger any errors in the VSCode IntelliSense.

Although restructuring the engine to work in this manner is a big task, I am making good progress. Smart pointers make this system really easy to work with. When the owning object in the game thread goes out of scope, its associated rendering object is also collected...unless it is still stored in a command buffer or otherwise in use! The relationships I have worked out work perfectly and I have not run into any problems deciding what the ownership hierarchy should be. For example, a context has a shared pointer to the window it belongs to, but the window only has a weak pointer to the context. If the context handle is lost it is deleted, but if the window handle is lost the context prevents it from being deleted. The capabilities of modern C++ and modern hardware are making this development process a dream come true.
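The context and window relationship maps directly onto `std::shared_ptr` and `std::weak_ptr`. The types below are stripped-down, hypothetical stand-ins for illustration (the real classes obviously carry much more state):

```cpp
#include <memory>

struct Context;

// Hypothetical minimal window: it can find its context but does not own it.
struct Window {
    std::weak_ptr<Context> context;
};

// Hypothetical minimal context: it keeps its window alive.
struct Context {
    std::shared_ptr<Window> window;
};

std::shared_ptr<Context> CreateContext(const std::shared_ptr<Window>& window) {
    auto context = std::make_shared<Context>();
    context->window = window;  // strong reference: context -> window
    window->context = context; // weak reference: window -> context
    return context;
}
```

Because only one direction is a strong reference there is no ownership cycle: dropping the last context handle destroys the context even though the window still points at it, while dropping the window handle leaves the window alive for as long as the context exists.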

Of course with forward rendering I am getting about 2000 FPS with a blank screen and Intel graphics, but the real test will be to see what happens when we start adding lots of lights into the scene. The only reason it might be possible to write a good forward renderer now is because graphics hardware has gotten a lot more flexible. Using a variable-length for loop and using the results of a texture lookup for the coordinates of another lookup were a big no-no when we first switched to deferred rendering, but it looks like that situation has improved.

The increased restrictions on the renderer and the total separation of internal and user-exposed classes are actually making it a lot easier to write efficient code. Here is my code for the index array buffer object that lives in the rendering thread:

```cpp
#include "../../Leadwerks.h"

namespace Leadwerks
{
    OpenGLIndiceArray::OpenGLIndiceArray() : buffer(0), buffersize(0), lockmode(GL_STATIC_DRAW) {}

    OpenGLIndiceArray::~OpenGLIndiceArray()
    {
        if (buffer != 0)
        {
#ifdef DEBUG
            Assert(glIsBuffer(buffer), "Invalid indice buffer.");
#endif
            glDeleteBuffers(1, &buffer);
            buffer = 0;
        }
    }

    bool OpenGLIndiceArray::Modify(shared_ptr<Bank> data)
    {
        //Error checks
        if (data == nullptr) return false;
        if (data->GetSize() == 0) return false;

        //Generate buffer
        if (buffer == 0) glGenBuffers(1, &buffer);
        if (buffer == 0) return false; //shouldn't ever happen

        //Bind buffer
        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, buffer);

        //Set data
        if (buffersize == data->GetSize() and lockmode == GL_DYNAMIC_DRAW)
        {
            glBufferSubData(GL_ELEMENT_ARRAY_BUFFER, 0, data->GetSize(), data->buf);
        }
        else
        {
            if (buffersize == data->GetSize()) lockmode = GL_DYNAMIC_DRAW;
            glBufferData(GL_ELEMENT_ARRAY_BUFFER, data->GetSize(), data->buf, lockmode);
        }
        buffersize = data->GetSize();
        return true;
    }

    bool OpenGLIndiceArray::Enable()
    {
        if (buffer == 0) return false;
        if (buffersize == 0) return false;
        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, buffer);
        return true;
    }

    void OpenGLIndiceArray::Disable()
    {
        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, 0);
    }
}
```

From everything I have seen, my gut feeling tells me that the new engine is going to be ridiculously fast.

If you would like to be notified when Leadwerks 5 becomes available, be sure to sign up for the mailing list here.
